Yet Another Kubernetes Intro - Part 7 - Storage

It’s time for part 7 in my introduction to Kubernetes. In the previous post, I talked about configuration. This time, it is all about storage!

Managing storage in Kubernetes is definitely worth a post of its own! Not because it is overly complicated as such, but because there are a few different options that I want to cover.

In the Docker world, when we need persistent storage, we mount volumes inside our containers. These volumes are either mapped to directories on the host, stored in a separate container, or backed by different types of drivers that allow us to map a whole heap of different storage back-ends as volumes. The latter is a paradigm that is well supported in Kubernetes as well. However, Kubernetes also supports a slightly more complex and flexible system on top of that. But let’s start by looking at how we can do it the “Docker way” first.

Mounting storage volumes

“Basic” volume mapping in Kubernetes is very similar to what we do in Docker. We choose a storage driver/plug-in that supports the storage back-end that we want to use, and then we configure our container to use that plug-in for our mounted volume. “All” we need to do is figure out which plug-in to use, and how to configure it. Each plug-in requires different configuration, for somewhat obvious reasons. The plug-in also needs to be installed in the cluster. An out-of-the-box cluster does not come pre-configured with a bunch of different storage plug-ins. Instead, it is up to us to choose what we want to install. However, most hosted clusters come pre-configured with plug-ins that make sense for the provider. For example, an Azure AKS cluster will have plug-ins for Azure Files and Azure Disks, while an AWS EKS cluster will have a plug-in for AWS Elastic Block Store.

Note: If you want to see what plug-ins are available in K8s, and how to configure them, have a look at https://kubernetes.io/docs/concepts/storage/#types-of-volumes

When mounting a volume in this way, by specifying the volume in the Pod definition, the lifetime of the volume is defined by the lifetime of the pod. This means that the volume will live as long as the pod lives, and then disappear when the pod is removed. However, if you use a storage plug-in that stores the data in a persistent way externally, in for example Azure storage, the actual storage is generally not removed. So it won’t cause a huge problem in most cases.

Setting up a cluster to play with

So far, when writing the posts in my intro to K8s series, I have used Docker Desktop for all of the demos, which has worked fine. However, for this particular post, I have decided to use an Azure Kubernetes Service (AKS) cluster instead. The reason is that the storage plug-ins need to be installed and configured in the cluster to work. But, as mentioned before, when you use a managed Kubernetes service, you generally get one or more plug-ins pre-configured in the cluster to support the provider’s storage back-end. So to keep it simple, I will just spin up an AKS cluster and use the built-in plug-ins, so that I can focus on the usage and not the set-up.

Creating an AKS cluster is a piece of cake, as long as you have an Azure account. I assume it is just as simple if you are running AWS or Google Cloud. That said, I do suggest making a few tweaks to the AKS set-up when creating a cluster that you just want to play with. First of all, scale it down to a single worker node. This saves a lot of money, and since the control plane/master nodes are provided by Azure, they won’t use any of your resources. This way, the only cost is a single VM. But use the default VM size. It might be a bit pricey, but it is fast enough to be fun to play with, and supports adding premium storage. Secondly, turn off the creation of a Log Analytics workspace. When playing around, there is very little need to monitor the nodes. Other than that, all the defaults make sense for a demo cluster.

Note: In a production scenario, there are other choices that should be made. But for playing around and demoing things, this is a quick way to get started.

This will generate a bunch of resources for us, in a couple of different resource groups. First of all, it creates the AKS resource in the selected resource group. But it also creates a second resource group that is used to place the other resources, such as networking and worker node VMs. The reason for this is that the AKS resource needs to have the right access rights to the other resources to be able to do its job (scaling up and down etc). And placing those things in a separate resource group, that the service principal connected to the AKS resource has access to, makes the whole security aspect pretty simple.

Mounting Azure storage as volumes

By default, there are 2 types of Azure storage that can be used when mounting a volume in AKS, Azure Disks and Azure Files. So what’s the difference?

Well… an Azure Disk is basically a VHD stored in Azure storage. Mounting one of these in a pod means that the Azure Disk gets attached as a hard drive to the worker node, and then mounted inside the pod at the specified path. At least I assume that is the way it works. This means that Azure Disks are a bit limited when it comes to Kubernetes, as a disk like this can only be attached to one VM (worker node) at a time. In a load-balanced environment with pods running on multiple worker nodes, that can be quite limiting.

Warning: Don’t quote me on the actual implementation of Azure Disks in AKS… I’m pretty much guessing that this is the way that it works based on the multiple warnings I have seen regarding using Azure Disks like this.

Azure Files, on the other hand, are SMB file shares hosted in an Azure storage account. This means that multiple worker nodes can mount the same share simultaneously, allowing pods across multiple nodes to use the same storage. Because I find this more useful in a lot of cases, I’ll be using an Azure File for this particular demo. But it might be good to know that using an Azure Disk is very similar…

Mounting an Azure File

First of all, we need to create an Azure storage account and an Azure File. This is fairly easy to do with a PowerShell script that uses the Azure CLI, similar to this:

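```powershell
# Names used in this demo; the resource group is assumed to exist already
$resourceGroup="storage-demo"
$accountName="aksstoragedemo"
$location="northeurope"
$fileName="storagedemo"

# Create a standard, locally redundant StorageV2 account
az storage account create -n $accountName -g $resourceGroup -l $location --sku Standard_LRS --kind StorageV2

# Get the connection string (used to create the share) and the first account key (used for the K8s secret)
$connectionstring=$(az storage account show-connection-string -n $accountName -g $resourceGroup -o tsv)
$key=$(az storage account keys list --resource-group $resourceGroup --account-name $accountName --query "[0].value" -o tsv)

# Create the Azure file share
az storage share create -n $fileName --connection-string $connectionstring
```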

As you might have guessed, this creates a storage account called aksstoragedemo in the resource group storage-demo. It then retrieves the connection string and the key for that account, uses the connection string to create a new Azure File (or Azure file share), and keeps the key for the next step.

With those resources in place, we need to store the credentials for the storage account in the cluster so that the system can access it. They are stored in a Kubernetes secret resource like this

```powershell
kubectl create secret generic azure-secret --from-literal=azurestorageaccountname=$accountName --from-literal=azurestorageaccountkey=$key
```
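If you would rather keep the secret as a manifest than as a command, a roughly equivalent Secret could look like the sketch below. It assumes the account name from the script above, and the key value is a placeholder that you would replace with the value of $key. The stringData field lets you write the values in plain text, and Kubernetes stores them base64-encoded.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: azure-secret
type: Opaque
# stringData takes plain-text values; Kubernetes stores them base64-encoded under data
stringData:
  azurestorageaccountname: aksstoragedemo
  # Placeholder: replace with the account key ($key in the script above)
  azurestorageaccountkey: "<storage-account-key>"
```

Applying this with kubectl apply -f should give you the same azure-secret as the command above. Keep in mind that a file like this contains the account key, so it probably shouldn't end up in source control.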

Ok, now that we have the storage account and a file share, and the credentials are available in the cluster, the system is ready to mount the file share in a pod. This is done by adding the following configuration to the pod spec

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: storage-demo
spec:
  containers:
  - name: my-container
    image: zerokoll/helloworld
    volumeMounts:
    - name: azurefile
      mountPath: /azure
  volumes:
  - name: azurefile
    azureFile:
      secretName: azure-secret
      shareName: storagedemo
      readOnly: false
```

As you can see from the pod spec, we are defining a volume called azurefile. This volume uses the Azure File plug-in, so we add an azureFile entry that specifies the name of the K8s secret containing the storage account name and key (azure-secret). Next, we give it the name of the file share to use (storagedemo), and specify that it shouldn’t be read-only. Finally, the volume is mounted in the container by adding it to the list of volumeMounts, specifying the name of the volume (azurefile) and the path to mount it at (/azure).

It might be worth noting that when adding this pod to the cluster, it can take a few seconds to start. This is because the worker node has to mount a new network share before it can mount it as a volume in the pod. But it shouldn’t take too long.

Note: If you decide to use an Azure Disk instead, it takes a bit longer the first time it is mounted. That’s because the disk needs to be formatted the first time it is being used.

Once the pod has been started, it is easy to verify that the volume is working. Just connect to the running pod using kubectl exec -it like this

```
kubectl exec -it storage-demo -- bash
```

This will connect to the pod and run bash in “interactive” mode, allowing us to play around inside the pod. To verify that the volume has been successfully mounted, we can list the contents of / like this

```
ls /
app azure bin boot dev etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var
```

And as you can see from the list, there is a directory called azure, just as expected. And now that we know that the directory is there, we can try and create a file inside it like this

```
echo 'test' > /azure/test.txt
```

To verify that the file has been created, and contains the expected data, we can run

```
cat /azure/test.txt
test
```

Tip: If you are following along on your machine, leave the terminal connected to the pod.

Now that we have seen that we can mount the file share as a volume in a pod, let’s see what happens if the same file share is mounted in another pod. To do that, we need another pod definition. It can be pretty much identical to the previous one, except for the name property, which has to be changed. If we don’t change it, the new definition will be seen as an update to the existing pod instead of the creation of a new one.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: storage-demo-two
spec:
  containers:
  - name: my-container
    image: zerokoll/helloworld
    volumeMounts:
    - name: azurefile
      mountPath: /azure
  volumes:
  - name: azurefile
    azureFile:
      secretName: azure-secret
      shareName: storagedemo
      readOnly: false
```

As the current terminal is still connected to the first pod, we need to open another one, and create the second pod from that. Once this second pod is up and running, we can connect to it using the second terminal window

```
kubectl exec -it storage-demo-two -- bash
```

Once connected, we can look at the contents of the text file we just created in the first pod by running

```
cat /azure/test.txt
test
```

Awesome! We now have persistent storage that is shared between 2 different pods!
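Instead of writing two almost identical pod definitions, the same experiment could be sketched as a Deployment with two replicas that share the volume. The deployment name and the app label below are made up for this example. The volume configuration is the same as in the pod specs above.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: storage-demo-shared        # hypothetical name
spec:
  replicas: 2                      # two pods, both mounting the same file share
  selector:
    matchLabels:
      app: storage-demo-shared     # hypothetical label, must match the template labels
  template:
    metadata:
      labels:
        app: storage-demo-shared
    spec:
      containers:
      - name: my-container
        image: zerokoll/helloworld
        volumeMounts:
        - name: azurefile
          mountPath: /azure
      volumes:
      - name: azurefile
        azureFile:
          secretName: azure-secret
          shareName: storagedemo
          readOnly: false
```

Because an Azure file share can be mounted by several nodes at once, this should work even if the two replicas end up on different worker nodes.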

However, as I mentioned in the beginning, there is actually another way to handle storage in Kubernetes. It is something called persistent volumes.

Persistent Volumes

Persistent Volumes are a little bit different from just mounting a storage back-end in a pod. They allow us to abstract away the actual storage implementation, so that a pod can state that it needs storage and have the cluster provide it in whatever way it has been configured to. Basically, they give us a Kubernetes resource that represents a piece of storage that can be used by an application. This also allows us to tie the lifetime of the volume to a separate K8s resource instead of a single pod.

This system is based around 3 different resource types in K8s, PersistentVolume (PV), PersistentVolumeClaim (PVC) and StorageClass (SC). These work together to provide a fairly easy to use storage system that allows pods to consume storage in a structured way, without having to know the ins and outs of the storage that is to be used.

The problem with talking about persistent volumes and K8s storage is that there are 2 different ways they can be consumed: “static” mode and “dynamic” mode. In static mode, an administrator pre-provisions volumes that pods can request and use. However, this requires someone to provision enough volumes to satisfy the needs of the cluster. In dynamic mode, on the other hand, an administrator defines what types of storage can be consumed, and then, when a pod requests some storage, K8s dynamically provisions a volume for the pod to use.

Statically provisioned persistent volumes

Let’s start by looking at the static version of PVs, where we pre-provision volumes in the cluster. In this mode, an administrator defines a set of PersistentVolume resources in the cluster, each representing a pre-provisioned storage area that is available to be used by pods. The PV defines the storage plug-in to be used, the plug-in specific configuration, the size of the storage it represents, the access modes, and something called a storage class. Note that a statically provisioned PV points at storage that already exists. Creating the PV resource does not create the backing storage, so the PV below simply refers to the storagedemo file share that was created earlier.

To create a PersistentVolume that uses an Azure File, we can use a spec that looks like this

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: azurefile
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  storageClassName: azurefile
  azureFile:
    secretName: azure-secret
    shareName: storagedemo
    readOnly: false
  mountOptions:
  - dir_mode=0777
  - file_mode=0777
  - uid=1000
  - gid=1000
  - mfsymlinks
  - nobrl
```

As you can see, it contains a lot of the same configuration that we used in the regular volume mount example above. However, it also defines a size (5Gi in this case), the access modes and a storage class. And finally, this spec defines a set of mount options for the volume.

The accessModes value defines how the volume may be mounted. ReadWriteOnce means that it can be mounted as read/write by a single node (pods running on that same node can share it). ReadOnlyMany means that it can be mounted as read-only by many nodes. And finally, ReadWriteMany allows it to be mounted as read/write by many nodes.

Note: Not all volume plug-ins support all modes. Make sure you check what your plug-in supports.

The storageClassName is a string of your choice that defines the “class” of storage this PV provides. You can use it to define different types of storage, such as fast for SSD-based PVs and slow for spinning disks. PVCs can then use it to select the type of storage they need.

Once we have a PersistentVolume resource in place, we can create another resource of a type called PersistentVolumeClaim. A PVC is responsible for “claiming” a PV, and the two live in a one-to-one relationship. When a PVC is created, the system finds a PV that fulfills the PVC’s requirements and binds it to the PVC. This makes it impossible for any other PVC to claim that same volume. Any PV that isn’t bound to a PVC is up for grabs by a future PVC.

The PVC defines the requirements it has for the PV that it should be bound to. It does this by defining the access modes it needs, the minimum storage size it requires, and the storage class it requires. It looks something like this:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
  - ReadWriteMany
  volumeMode: Filesystem
  resources:
    requests:
      storage: 5Gi
  storageClassName: azurefile
```

As you can see, this PVC needs the access mode to be ReadWriteMany, and requires the selected PV to be at least 5Gi and to have the storage class name azurefile. Luckily, all of these requirements are fulfilled by the PV we defined above, so adding this PVC to the cluster will bind it to that PV.

It’s probably worth mentioning that setting the storageClassName to "" does not mean that any class will work. Instead, it explicitly matches PVs whose storage class is "" (or that have no class at all). Not supplying a class in the PVC, however, results in one of two outcomes depending on whether a default storage class has been defined. If it has, the cluster assigns the default storage class to any PVC that doesn’t define one on its own. If not, it is treated in the same way as setting it to "".
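To make the "" case concrete, here is a sketch of a PVC that opts out of the default storage class. The claim name is hypothetical. The important part is the empty storageClassName, which means only PVs without a class are considered, and the default class is not filled in.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: no-class-pvc          # hypothetical name
spec:
  accessModes:
  - ReadWriteOnce
  # Empty string: bind only to PVs without a storage class; the default class is NOT applied
  storageClassName: ""
  resources:
    requests:
      storage: 1Gi
```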

Note: On top of the storage class, the PVC can also define a label selector to further limit the PVs it can use. This way a set of PVs can be provisioned and “tagged” for a specific purpose.
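A minimal sketch of such tagging could look like this. It assumes a hypothetical purpose: reports label and a hypothetical, pre-created reports file share. The PV carries the label, and the PVC only considers PVs whose labels match its selector.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: azurefile-reports        # hypothetical name
  labels:
    purpose: reports             # hypothetical label used to "tag" this PV
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  storageClassName: azurefile
  azureFile:
    secretName: azure-secret
    shareName: reports           # hypothetical, pre-created file share
    readOnly: false
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: reports-pvc              # hypothetical name
spec:
  accessModes:
  - ReadWriteMany
  storageClassName: azurefile
  resources:
    requests:
      storage: 5Gi
  # Only PVs whose labels match this selector are considered for binding
  selector:
    matchLabels:
      purpose: reports
```

A PVC with a selector can't get a dynamically provisioned volume, so this approach is mainly useful with statically provisioned PVs like the ones in this section.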

But what happens if we create a PVC with requirements that cannot be fulfilled by any available PV? In that case, the PVC stays unbound (its status is Pending), which makes it useless until a new PV that fulfills the requirements is created.

Once you have added the PVC to the cluster, and it has been bound to a PV, you can mount it as a volume in a pod like this:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod
spec:
  containers:
  - name: hello-world
    image: zerokoll/helloworld
    volumeMounts:
    - mountPath: "/mnt/demo"
      name: my-volume
  volumes:
  - name: my-volume
    persistentVolumeClaim:
      claimName: my-pvc
```

And this shows off the cool part of using PVs and PVCs! The pod mounts a PVC. It doesn’t care about the storage mechanism being used. It doesn’t need to configure anything. All of that is handled by the PVC and PV. All the pod needs to know is the name of the PVC it should use, which is very flexible.
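The pod can still decide how it uses the claim, though. As a sketch with a made-up pod name, this is the same pod mounting my-pvc read-only. Setting readOnly on the volumeMount makes the mount read-only inside the container, while readOnly on the persistentVolumeClaim entry forces every mount of that volume in the pod to be read-only.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod-readonly          # hypothetical name
spec:
  containers:
  - name: hello-world
    image: zerokoll/helloworld
    volumeMounts:
    - mountPath: "/mnt/demo"
      name: my-volume
      readOnly: true            # the container sees /mnt/demo as read-only
  volumes:
  - name: my-volume
    persistentVolumeClaim:
      claimName: my-pvc
      readOnly: true            # forces all mounts of this volume in the pod to be read-only
```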

Ok, so now we know how to create persistent volumes and persistent volume claims, and how to mount a PVC in a pod. But what about deleting them? That is done just like for any other resource, using kubectl delete. However, there are checks in place that stop us from deleting PVs and PVCs that are in use. Deleting a PVC that is mounted by a pod, or a PV that is bound to a PVC, just sets its status to Terminating. It keeps that status until it is no longer in use (all pods using the PVC have been removed, or the PVC bound to the PV has been removed), and then the resource is removed.

If you want to see the status of a PV or PVC, you can just use the trusty kubectl describe

```
kubectl describe pvc my-pvc
Name:          my-pvc
Namespace:     default
StorageClass:  example
Status:        Terminating
Volume:
Labels:        <none>
Annotations:   volume.beta.kubernetes.io/storage-class=example
               volume.beta.kubernetes.io/storage-provisioner=example.com/hostpath
Finalizers:    [kubernetes.io/pvc-protection]
…
```

In this case, the PVC my-pvc has a Terminating status. This means that someone has tried to delete it, but it is still in use by a pod and cannot be deleted yet. As soon as the last pod that references my-pvc is deleted, the PVC will be deleted as well. And when a PVC is deleted, the bound PV is “reclaimed” in one of 3 ways, depending on the reclaim policy set in the PV definition. The 3 available options are:

Retain keeps the volume in the cluster, but does not make it available to other claims, as it probably contains data from its previous use. Instead, the administrator has to take care of it manually. This means deleting, and optionally recreating, the PV, preferably after cleaning the underlying storage so that a new PVC doesn’t end up with pre-populated data. Unless that is the intention, of course…

Delete deletes the PV, as well as the backing storage (if the storage plug-in supports it).

Recycle deletes the contents of the volume before making it available for a new claim. This requires the storage plug-in to support it. However, this mode is deprecated, and dynamic provisioning, which I will cover a little later, should be used instead.

To select which one you want to use, you set the persistentVolumeReclaimPolicy on the PersistentVolume resource.
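As a sketch, this is the PV from earlier with an explicit reclaim policy. Manually created PVs already default to Retain (dynamically provisioned ones default to Delete), so this mainly makes the choice visible in the spec.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: azurefile
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  # Keep the PV (and its data) when the bound PVC is deleted; it becomes Released
  persistentVolumeReclaimPolicy: Retain
  storageClassName: azurefile
  azureFile:
    secretName: azure-secret
    shareName: storagedemo
    readOnly: false
```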

Dynamically provisioned persistent volumes

So far I have talked about PVs that have been pre-provisioned by someone. However, in a lot of cases it would be pretty useful to be able to request a storage volume without having to have someone provision it for us ahead of time. And that’s where dynamically provisioned volumes come into play.

In this mode, we do not create PV resources. Instead, we create StorageClass resources, which contain the configuration needed to dynamically provision PVs in the cluster. A PVC then selects the StorageClass to use through its storageClassName property.

Note: This means that setting the storageClassName property to "" effectively disables dynamic provisioning for that PVC.

A StorageClass specification can look like this

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: azurefilestorage
provisioner: kubernetes.io/azure-file
parameters:
  skuName: Standard_LRS
  location: northeurope
  storageAccount: demostorage
```

This configures a StorageClass called azurefilestorage that uses the kubernetes.io/azure-file provisioner to provision PVs when needed. The configuration provided, in this case the storage SKU, the location and the storage account, depends on the selected provisioner. Note that Azure storage account names must be 3 to 24 characters long and may only contain lowercase letters and numbers.

Any PVC that sets the storageClassName to azurefilestorage will cause a new Azure File based PV to be dynamically created, which in turn causes a new Azure file share to be created in the storage account called demostorage.

Warning: Depending on the selected provisioner, this can incur extra costs. Storage isn’t free. So even if dynamically provisioning storage is really cool and flexible, you probably want to keep track of the costs that it incurs.

In AKS, there are 2 StorageClasses that are added to the cluster by default: one that is literally called default, and one called managed-premium. Both automatically provision an Azure Disk when used. The difference between the two is that default uses slower, cheaper storage, while managed-premium uses faster, but more expensive, premium storage.

```
kubectl get sc
NAME                PROVISIONER                AGE
default (default)   kubernetes.io/azure-disk   8m58s
managed-premium     kubernetes.io/azure-disk   8m58s
```

To use them to dynamically create a PV for us, we just need to create a PVC that defines the required storage class. Like this

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: azure-managed-disk
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: default
  resources:
    requests:
      storage: 1Gi
```

This creates a PVC called azure-managed-disk using the default storage class. And having a look at the created PVC gives us this

```
kubectl get pvc
NAME                 STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
azure-managed-disk   Pending                                      default        9s
```

Ehh… Pending? Well, it does take a few seconds to get a disk set up in storage. But, re-running the command a few seconds later gives us this

```
kubectl get pvc
NAME                 STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
azure-managed-disk   Bound    pvc-b24a571d-1ba6-47e4-9a58-532f7fcbd5a6   1Gi        RWO            default        63s
```

Remember: As mentioned before, Azure Disks should not be used for read/write in several pods. But for this demo, that is quite fine as I only have one pod on a single node.

Having a deeper look at the PVC using kubectl describe gives us a bit more information

```
kubectl describe pvc azure-managed-disk
Name:          azure-managed-disk
Namespace:     default
StorageClass:  default
Status:        Bound
Volume:        pvc-b24a571d-1ba6-47e4-9a58-532f7fcbd5a6
Labels:        <none>
Annotations:   kubectl.kubernetes.io/last-applied-configuration:
                 {"apiVersion":"v1","kind":"PersistentVolumeClaim","metadata":{"annotations":{},"name":"azure-managed-disk","namespace":"default"},"spec":{...
               pv.kubernetes.io/bind-completed: yes
               pv.kubernetes.io/bound-by-controller: yes
               volume.beta.kubernetes.io/storage-provisioner: kubernetes.io/azure-disk
Finalizers:    [kubernetes.io/pvc-protection]
Capacity:      1Gi
Access Modes:  RWO
VolumeMode:    Filesystem
Mounted By:    <none>
Events:
  Type    Reason                 Age   From                         Message
  ----    ------                 ----  ----                         -------
  Normal  ProvisioningSucceeded  4m8s  persistentvolume-controller  Successfully provisioned volume pvc-b24a571d-1ba6-47e4-9a58-532f7fcbd5a6 using kubernetes.io/azure-disk
```

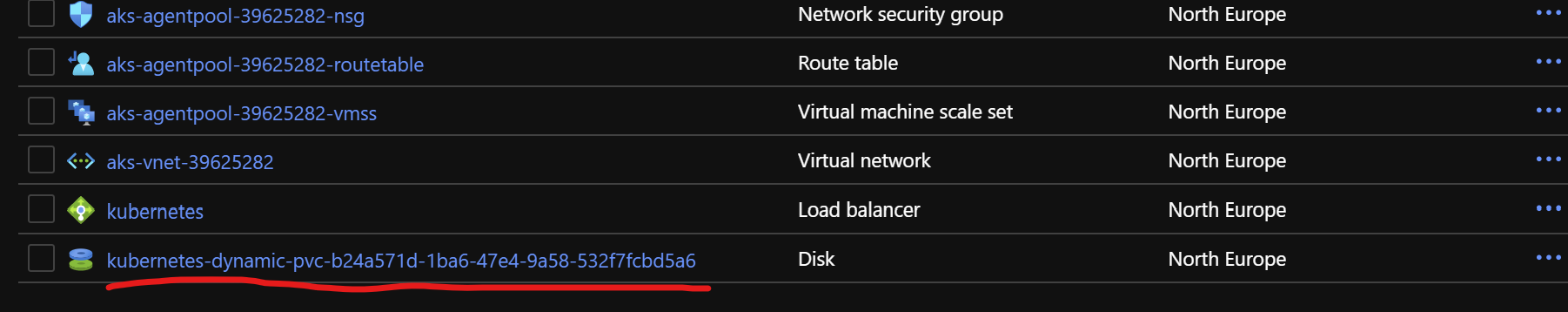

The most interesting thing here is the last row that says Successfully provisioned volume pvc-b24a571d-1ba6-47e4-9a58-532f7fcbd5a6 using kubernetes.io/azure-disk. And why is that interesting? Well, because if we go to the Azure portal and have a look at the resource group that was automatically created during the creation of my cluster, we can see the following

Note: The automatically created resource group will have a name starts with MC_

This is the Azure Disk that was automatically created for us when the PVC was added to the cluster.

Now that the PVC is in place, it can be mounted as a volume in a pod like this:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: storage-demo
spec:
  containers:
  - name: my-container
    image: zerokoll/helloworld
    volumeMounts:
    - mountPath: "/azure-disk"
      name: azure-volume
  volumes:
  - name: azure-volume
    persistentVolumeClaim:
      claimName: azure-managed-disk
```

Note: It takes a bit of time to get this pod up and running in the cluster as the disk needs to be formatted before first use, which takes a little while.

Once the pod is up and running, we can attach to the pod using kubectl exec -it, and make sure that the mounted volume is available by running

```
ls /
app azure-disk bin boot dev etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var
```

And as expected, there is a directory called azure-disk.

Once the pod has served its purpose, it can be deleted together with the PVC, which in turn deletes the PV resource as well. The actual deletion can take a little while, though. During that time, if you run kubectl describe on the PV, you will see an error message saying that the disk deletion failed because the disk is currently attached to a VM… But after a while, it gets removed as it should. The VM just needs to detach the disk first, which can take a few seconds.

But what if we wanted to use an Azure File instead? Well, in that case we have to set up our own StorageClass like this

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: azurefile
provisioner: kubernetes.io/azure-file
mountOptions:
  - dir_mode=0777
  - file_mode=0777
  - uid=1000
  - gid=1000
  - mfsymlinks
  - nobrl
  - cache=none
parameters:
  skuName: Standard_LRS
```

This creates a StorageClass resource called azurefile that creates an Azure File using standard, locally redundant storage, whenever a new PVC using this class is added to the cluster. Adding this definition to the cluster gives us 3 different storage classes in the cluster

```
kubectl get sc
NAME                PROVISIONER                AGE
azurefile           kubernetes.io/azure-file   39s
default (default)   kubernetes.io/azure-disk   33m
managed-premium     kubernetes.io/azure-disk   33m
```

With this storage class in place, we can get a new PV dynamically created by applying a PVC spec that uses the new storage class, like this:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: azure-file
spec:
  accessModes:
  - ReadWriteMany
  storageClassName: azurefile
  resources:
    requests:
      storage: 1Gi
```

And after allowing a few seconds to get the new Azure File created, we can have a look at the created PVC and dynamically created PV like this

```
kubectl get pvc,pv
NAME                               STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/azure-file   Bound    pvc-4015adcd-2170-412c-9659-3abfaa35ee3c   1Gi        RWX            azurefile      24s

NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                STORAGECLASS   REASON   AGE
persistentvolume/pvc-4015adcd-2170-412c-9659-3abfaa35ee3c   1Gi        RWX            Delete           Bound    default/azure-file   azurefile               6s
```

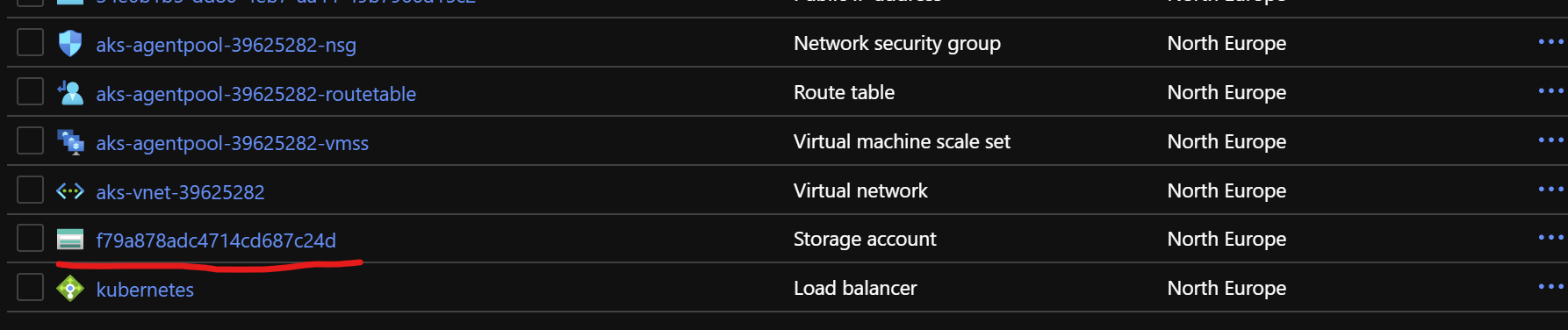

And looking at the Azure Portal, there is now a new storage account resource added

And inside that storage account, there is a 1Gb file share that has been automatically provisioned for us. Just as we wanted!

With this new PVC in place, we can mount it in a pod in exactly the same way as we did with the Azure Disk, all through the awesome abstraction of PVCs. And as soon as the pod and PVC are removed, the connected PV is deleted, which in turn causes the dynamically created file share to be removed.
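For completeness, a pod mounting the azure-file claim could look like the sketch below. It mirrors the Azure Disk pod. The pod name, volume name and mount path are just examples.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: storage-demo-file        # hypothetical name
spec:
  containers:
  - name: my-container
    image: zerokoll/helloworld
    volumeMounts:
    - mountPath: "/azure-file"   # example mount path
      name: azure-file-volume
  volumes:
  - name: azure-file-volume
    persistentVolumeClaim:
      claimName: azure-file      # the dynamically provisioned claim from above
```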

If you don’t want the file share to be removed automatically, you can set the storage class’s reclaimPolicy to Retain. A StorageClass can’t be updated once it has been created, so this means creating the class with that setting (or deleting and recreating it). With Retain, the PV and the file share are left in place when the PVC is removed. However, the PV is set to a Released state, and will not be reused by future PVCs. Leaving it in place allows you to attach to the file share and access whatever data was left there by the system. Once that has been done, the PV can be deleted manually. Just remember that you also need to delete the file share manually with this configuration.
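A sketch of such a class could look like this. It uses a hypothetical name so that it can live next to the existing azurefile class, and it is identical to that class apart from the reclaimPolicy field.

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: azurefile-retain         # hypothetical name, separate from the existing azurefile class
provisioner: kubernetes.io/azure-file
# Keep dynamically provisioned PVs (and their file shares) when the PVC is deleted
reclaimPolicy: Retain
mountOptions:
  - dir_mode=0777
  - file_mode=0777
  - uid=1000
  - gid=1000
  - mfsymlinks
  - nobrl
  - cache=none
parameters:
  skuName: Standard_LRS
```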

Comment: There is obviously nothing that stops you from combining the static and dynamic PersistentVolume provisioning. Just make sure that the storage class names don’t overlap!

I think that is everything you need to know about storage, and then some! At least as an introduction… It might have been a bit deeper than you really needed in an intro. But there is so much useful stuff to cover. These were the overarching parts that I found important. But once again, the cluster configuration and plug-in selection will define what configuration you need to provide.

Go play with your cluster!